It’s self-evident that taking an exam is mentally taxing, but the physiological effects of answering exam questions may come as news. In his book Thinking, Fast and Slow, psychologist Daniel Kahneman cites studies showing that our nervous system draws down the glucose in our blood when we are doing complex mental activity like test taking, just as it would if we were jogging. We become tired. And that makes us more prone to rely on impulse and gut feeling when we should be thinking through our answers. There’s a quick fix to this problem. Eat something. After 30-40 minutes of working through questions on the exam, snack to restore your energy levels. By doing that, you’ll be more deliberate during the exam, and your answers will more likely reflect what you do know.

Category Archives: Educational Psychology

Bar Students Can Reset Goals to Counteract Negative Thoughts About Performance

Practicing bar study questions can bring a riot of emotions. Bar students rejoice when they get a high score on a practice essay or MBE set, but despair when they get a low or middling score. Psychologists have found that how students react to a less-than-stellar performance is affected by the kind of goals students set for themselves.

Students who focus on performance goals (e.g., getting a certain score on a practice test) generate negative thoughts from a mediocre or poor result. These students ask the questions “What is my current ability?” and “Is my ability adequate?” From this orientation, these learners compare their performance to others or to some standard, start to believe there is nothing they can do to improve (learned helplessness), and avoid challenging tasks that could help them grow and learn more. A vicious spiral could ensue, leading to a self-fulfilling prophecy of under-performance.

Psychologists say that a learning or process goal is better. Students who adopt a learning or process goal take the long view of learning. They ask, “How can I best acquire skills or master tasks over a period of time?” Students with this goal orientation don’t take a poor performance on a practice test as a defeat but as an opportunity to improve their skill. A poor performance is seen as a source of information on what aspects of their skill need improvement. They look back at their poor performance, figure out what skills or areas they need to work on, change their strategies, and work harder. Students with a process or learning goal orientation have greater self-efficacy (a perceived confidence in their ability to perform a specific task), exert greater effort, and persist against adversity. They are risk takers who seek out challenging problems, which, in turn, build their skills.

The takeaway is that it’s better to focus on learning or process goals. You should set goals to work on techniques, strategies or knowledge areas that will improve your bar exam test-taking abilities. If it’s hard for you to know what exact skills, strategies or abilities you need to work on after a disappointing performance on a practice set, then ask your bar mentor or bar coordinators for help. Remember, if you focus on process or learning goals, you’re less likely to let anxiety, fear or self-doubt creep in. Setting a learning or process goal should be your reset button to click when you start to feel bad about a low score on a practice exam. Doing this will help you reframe an unhappy result into a positive springboard for improving!

(Note: Based on Carol Dweck’s research at Harvard’s Laboratory on Human Development and later at Yale. I’ve omitted citations to her classic study as well as the vast research literature on the effects of different kinds of goals on human learning and motivation.)

Filed under Educational Psychology

Too Early to Say that the Pen is Mightier than the Keyboard

Recently there was an article that captured the attention of the popular press and those who teach. A few months ago, The Atlantic trumpeted, “To Remember a Lecture Better Take Notes by Hand.” Scientific American also got into the act with the article “A Learning Secret: Don’t Take Notes with Your Laptop.” Even the research article upon which these news reports were based had a catchy title, “The Pen is Mightier than the Keyboard: The Advantages of Longhand over Laptop Notetaking.” Soon education listservs began to advocate banning the laptop from the classroom. What’s not to like about a finding that fits our sneaking suspicions about digital devices? There is much to admire about the Mueller and Oppenheimer (23 April 2014) study that found handwritten notes were superior to laptop notes; it’s a tightly constructed study. Based on the Mueller article, should educators be telling students not to use their laptops for notetaking?

Let’s step back for a moment. Isn’t it a bit rash to conclude from a single experimental study that all note-taking by hand is always better than notetaking from a digital device? Just because a research finding seems plausible or agreeable doesn’t mean that the finding is valid or generalizable.

What is the evidence for superiority of handwritten notes to laptop notes? Mueller, et al. (2014) found that students who took notes by hand did better on reading comprehension tests than those taking notes on a laptop, that the laptop notes tended to be verbatim transcripts of the lecture and that the handwritten notes maintained their superiority even after the laptop students were directed not to write verbatim notes. Is this study enough for us to advise students to write notes only by hand?

Rarely does one single study prove a hypothesis. This point is made by educational psychologists Stanovich and Stanovich (2003) who write about:

[T]he mistaken view that a problem in science can be solved with a single, crucial experiment, or that a single critical insight can advance theory and overturn all previous knowledge. This view of scientific progress fits nicely with the operation of the news media, in which history is tracked by presenting separate, disconnected “events” in bite-sized units. This is a gross misunderstanding of scientific progress and, if taken too seriously, leads to misconceptions about how conclusions are reached about research-based practices.

One experiment rarely decides an issue, supporting one theory and ruling out all others. Issues are most often decided when the community of scientists gradually begins to agree that the preponderance of evidence supports one alternative theory rather than another. Scientists do not evaluate data from a single experiment that has finally been designed in a perfect way. They most often evaluate data from dozens of experiments, each containing some flaws but providing part of the answer. (Emphasis supplied.)

Thus, it’s too early to conclude that laptop notes are inherently inferior to handwritten notes. At present, the studies are far too narrow or limited to generalize them to broad types of notetaking from all kinds of lectures. The lectures in the Mueller study were based on TED talks, which were 15-20 minute lectures on “topics that would be interesting but not common knowledge.” By contrast, the lectures that are encountered in college as well as in law school synthesize readings students had earlier been assigned. It’s a big leap to argue that laptop notetaking is inferior for all types of lectures.

Even if the results from the Mueller study could be replicated, that doesn’t necessarily mean that the laptop disadvantage is immutable. An alternative explanation for the Mueller results is that students find typing on a laptop easier than writing by hand, and that this greater facility induces them to type exactly what they hear. If that is the case, laptop students will have to learn how to process their notes in real time during the lecture or in the time after the lecture.

The Mueller findings have to be replicated by other scientists, and the experiment has to be expanded to cover other types of lectures and situations. More studies need to be performed, involving different subjects, different source lectures, and lectures that resemble the actual lectures occurring in higher education classrooms. Until other studies confirm the Mueller findings and extend them to broader types of classroom lectures, scientists cannot yet conclude that laptop notetaking is inferior to notetaking by hand.

What can or should we tell students concerning laptop notetaking? We can say that, based on a single study in which students listened to short 15-20 minute lectures on general topics, laptop notes tended to be more verbatim than handwritten notes. We can tell students that there is a viable yet unproven hypothesis that there is a disadvantage. Yet it is too early to conclude that there is a laptop disadvantage, too early to say what exactly is the basis for the disadvantage, and too early to say whether such a disadvantage can be overcome by training.

Caution should rule the day. Recent history is populated with examples of the public (and even scientists) leaping to conclusions from a sparse number of studies. That was the case several years ago, when initial studies had indicated that beta-carotene had cancer-fighting properties. After more studies were done, the scientific community later rejected the initial finding.

It could very well be that laptop notetaking is inferior to long-hand notetaking, but as it stands now, the body of evidence supporting that hypothesis does not exist.

Filed under Educational Psychology

How to Make Test-Enhanced Learning Work in a Law School Classroom

In the public’s mind (and those in the legal academy are a part of that public) there has been a growing chorus of criticism that there is too much testing, and that this over-evaluation leads to student anxiety and too much educational bean counting. So when there were reports of studies showing that frequent testing might be good for students, many heads were turned.

What’s important to understand is that these studies were about low-stakes practice tests aimed at helping learners, as opposed to the “high stakes” one-time-only exams like finals or midterms (or standardized exams) that have a huge impact on how a student is graded or labeled.

Frequent in-class testing can enhance learning in a law school classroom, but it needs the structural supports to make it effective. Several recent studies have established the benefits of test-enhanced learning; they include Pennebaker, J.W., Gosling, S.B., & Ferrell J.D. (2013). Daily Online Testing in Large Classes: Boosting College Performance while Reducing Achievement Gaps. PLoS ONE, 8(11); and Roediger, H.L., Agarwal, P.K., McDaniel, M.A., and McDermott, K.B. (2011). Journal of Experimental Psychology, 17(4), 382-395.

Roediger et al. (2011) showed that middle school students in a social studies class performed better when they were given frequent low-stakes quizzes. In that study, students were given online multiple-choice tests two days after each lesson was taught. They used clickers to select an answer to a question presented on screen, and then the question stem and correct answer would appear on a large screen.

So if low-stakes exams aren’t really bad for you, how exactly are they beneficial to learning?

One possible theoretical explanation for the benefits of test-enhanced learning is extra exposure to the material: on this view, it is not the testing technique that helps but the extra practice that students engage in because of an impending test. But this theoretical explanation can be eliminated because Roediger’s study showed that the frequently tested group did better than students who had been told to study more.

Another explanation is based on the “transfer appropriate processing” theory, which posits that benefits occur when the format of the practice matches the test upon which the students are assessed. Mirroring the type of questions on the final exam facilitates “fluent reprocessing” of the information needed to answer the questions on the final test. For example, if the final test consists of multiple-choice questions, the practice tests must also be multiple-choice. Likewise, if the final test consists of questions asking for an essay answer, the practice tests must also be essay-type questions. Practicing with matching tests builds stronger neural connections, which later help on an exam.

Techniques based on transfer appropriate processing are in wide use. For example, much of bar exam preparation is premised on it. Bar prep consists of constant practice with multiple-choice questions as preparation for the Multistate Bar Exam as well as essay writing practice. Likewise, law students typically practice for final exams by mirroring the type of questions expected on a final or midterm. In short, practice is better than not practicing, but practicing with questions that match the format of final exam questions is even better.

Practicing with matching questions is good, but practicing with feedback is superior because the feedback tells learners what they need to fix. Practice does not make perfect if practice doesn’t lead to corrections in performance; without feedback, practice may simply reinforce imperfections. A first-year law student can spend hours practicing how to brief a case, but without feedback, the student will continue making the same mistakes.

As good as practice with feedback is, feedback is effective only if the student knows how to employ the feedback and is motivated to use it. Motivation is what makes us humans and not robots. A self-correcting machine is programmed to take in feedback and make appropriate changes. But any human has to feel motivated to use the feedback and take corrective action. Corrective feedback can be perceived as negative if the student has a pre-existing low opinion of his or her ability and believes there is nothing s/he can do to improve. In that instance, feedback may simply be taken as a negative, leading to a downward spiral of lower motivation, effort and persistence, and ultimately to poor performance, which in turn inspires even lower confidence, motivation, and effort.

This means that educators have to make sure that the right conditions exist for students to learn when they make mistakes. In short, legal educators need to help students maintain relatively high self-efficacy. Psychologist Albert Bandura, who developed the concept, defined perceived self-efficacy as “beliefs in one’s capabilities to organize and execute the courses of action required to produce given attainments.” Self-efficacy shouldn’t be confused with self-esteem; self-esteem is a wide-ranging perception about one’s self-worth, whereas perceptions of self-efficacy are contextual and may differ from one task to another, e.g., “I may have high self-efficacy when it comes to playing tennis, but low self-efficacy for playing chess.”

There is abundant research showing that moderately high levels of self-efficacy are associated with the academic risk taking, persistence, and effort needed to become a good learner. Teachers can promote self-efficacy through low-stakes practice tests (tests that have a small impact on a student’s grade) and by emphasizing the mastery of skills and understandings rather than doing better than others. Psychologist Carol Dweck and others have long established that a “learning goal orientation,” which focuses on increasing competence, promotes high persistence and effort, while a performance goal orientation, which focuses on performance relative to others, fosters lower effort and persistence when students start to make mistakes.

There is well-established research that tells us that having a learning goal orientation is especially important because of the attributions we make when we fail. With a learning goal orientation, we will attribute failure to not being able to use the skills that are connected to the performance outcome; for example, a student with a learning goal orientation will attribute a poor outcome on a law school final to, say, not being able to fully apply the legal rule to a case. By contrast, a law student with a performance goal orientation will focus on the low grade on a final exam and on how much lower that grade is relative to everyone else’s. That student is more likely to believe that her abilities are innate and fixed: “I’m just not any good at doing this lawyer stuff.”

Law school instructors can build self-efficacy by establishing learning environments that focus on building specific skills and emphasizing the fact that there are different strategies one can use to improve performance.

In summary, frequent practice tests can enhance learning in the law classroom; but law school instructors can optimize the benefits from frequent testing by doing the following:

- First, the practice tests need to be low stakes. They need some stakes because students need stakes to exert effort. But the stakes can’t be too high, since that would undermine student self-efficacy.

- Second, instructors need to give practice tests frequently and the practice tests need to match the type of questions that will be asked on the final. Of course there has to be a readily available bank of these tests for this to be feasible.

- Third, feedback needs to be immediate. Instructors can do that by presenting the right answer to the questions students have just answered. Immediate feedback on multiple-choice questions is easy to administer, but feedback on essay-type answers is hard to generate. Instructors may have to rely on collaborative learning techniques to administer feedback on answers to practice essay questions.

- Fourth, students need to be trained so that they can use the feedback to identify deficits in their performance and they need to be trained in what strategies they can employ to improve their performance. For instance, if a pattern of errors shows that the student is overgeneralizing the rules from a case decision, then the student needs to re-calibrate her interpretation.

- Fifth, students have to be motivated to fix the things they may be doing wrong—learning environments must build self-efficacy so they put in the effort and persist in the face of difficulties. To do this, teachers have to promote a learning goal orientation instead of a performance goal orientation.

- Sixth, teachers need to be aware of whether other courses entail frequent testing. The Roediger study showed benefits when there was one single course with frequent testing but it is doubtful that frequent testing in more than one course would be helpful. The student stress of having to prepare that much more for a second, third or fourth course with frequent tests seems counterproductive.

Law students, for their part, can do the following:

- Students should seek out as many opportunities as possible to test themselves using practice exams that match the type of questions on a final or midterm exam. They should put in the effort to answer the questions so that they truly test themselves and get an honest assessment of what they know and don’t know. Multistate Bar Exam questions are good sources of multiple-choice questions, and many law faculty make available past exams containing essay questions.

- Students should compare their answers to an answer key available for Multistate Bar Exams.

- Students themselves should compare the answers and assess where the errors are occurring in order to make adjustments. They should avail themselves of academic support staff in making these assessments.

- Students should keep in mind that there is always room for improvement; students should be realistic that small incremental steps are more likely to improve achievement.

All in all, frequent testing can work in a law school classroom, but instructors and students need to create the right conditions that allow for feedback and motivation to use the feedback to improve performance.

Suggested books and articles for further reading

Bandura, Albert. (1997). Self-efficacy: The exercise of control. (New York: W.H.Freeman).

Dweck, C.S. (1986). Motivational processes affecting learning. American Psychologist, 41, 1040-1048.

Zimmerman, B.J. (2000). Self-efficacy: An essential motive to learn. Contemporary Educational Psychology, 25, 82-91.

Filed under Educational Psychology

Data Offered to Support ABA Proposal to Change Bar Pass Requirement Raises More Questions than It Answers

About two weeks ago, a lawyer friend of mine asked me to look at the statistics submitted in the April 26-27, 2013 minutes of the ABA’s Section of Legal Education and Admissions to the Bar Standards Review Committee (“Standards Committee”). The worn-out joke about lawyers is that they went to law school because they hate or couldn’t do statistics. But I am a lawyer, I like statistics, and a significant part of my doctoral training was in statistical models. When I reviewed the Standards Committee presentation of the data, I became dazed and confused.


The statistics were offered in support of the Standards Committee’s proposal to change the bar passage requirement for the accreditation of law schools. Under Proposed Standard 316, 80% of a law school’s graduates must pass the bar within 2 years (5 tries) of graduation (this is called the “look-back period”). Under the current rule, a 75% pass rate must be achieved within 5 years (10 attempts) of graduation. This change has stirred controversy because of its potential impact on non-traditional students, particularly students of color.

The proposed changes are based on a study of past examinees, and it’s important to review that study before you make up your mind.

In support of the proposed changes, the Standards Committee musters up a slender thread of evidence: that the overall bar pass rate for the past five bar exams has ranged between 79% and 85%. Opponents have jumped all over this morsel of evidence. Readers can see these objections expressed in a letter to the Standards Committee from the Chair of the ABA Council for Racial and Ethnic Diversity in the Educational Pipeline.

This posting takes a closer look at the data offered to support the ABA proposal to shorten the look-back period from the existing five years (10 tries) to two years (5 tries).

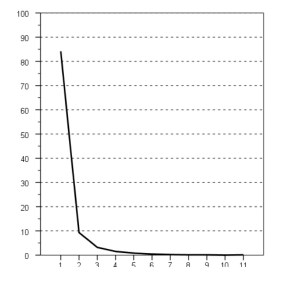

Figure 1 was used to support the Standards Committee’s proposal, and it warrants a careful look because it is very similar to the other graphs the Committee used to support its proposal. The figure shows a line graph in which the x-axis stands for the number of attempts at taking the Multistate Bar Exam (MBE) and the y-axis represents the percentage of examinees. The reader gets the general impression that there is a big drop in the number of repeaters after the first attempt. The descent flattens out and starts to approach zero at the fifth and later attempts. This general impression seems to support Proposed Standard 316’s shortening of the look-back period to 2 years (5 attempts), since the number of examinees seems infinitesimal after five attempts.

Figure 1. Percentage of Examinees Taking the MBE One or More Times (N=30,878; 1st attempt in July 2006). Y-axis: percent taking. X-axis: number of times taking the MBE.

But upon closer examination, it’s hard to make sense of what this figure is depicting.

It is said that a picture paints a thousand words, but this graph, like other graphs the Standards Committee used in its report, raises a thousand questions.

- Figure 1 refers to 30,878. It is difficult, if not impossible, for the reader to tell what the 30,878 stands for.

  - Does 30,878 represent the number of people who took the exam for the first time in July 2006 and those who re-took the exam during the July 2006-July 2011 period?

  - Where does the number 30,878 come from? Is it the total number of examinees from ABA law schools or does it include examinees from non-ABA law schools?

  - Are the 30,878 a sample of a larger population of examinees? If so, what is the size of this population?

  - How was the sample chosen? What was the procedure for choosing the sample?

  - Is this sample an accurate depiction of the population of examinees? What confidence can we have that the conclusions about this sample apply to a larger group of people?

- Figure 1 refers to percentages. For example, in the first try, there’s 84%; 2nd try, 10%, etc.

  - What numbers do these percentages refer to? 30,878? Different numbers of examinees who took the bar from July 2006 to July 2011? If so, what are those numbers?

- There are also obvious questions that the Standards Committee did not ask:

  - Are the repeaters from a wide range of schools? Do the repeaters disproportionately come from schools with large populations of graduates of color?

  - What explains the low percentage of repeaters? Do the need to earn an income and the opportunity cost of preparing for the exam suppress re-taking the bar?

  - What is the behavior of the re-takers? Do they skip a year or take the next bar exam administered?

If the Standards Committee answered these questions, we would at least get a basic understanding of the repeater. It certainly isn’t too much to ask for this.

Are there plausible explanations for the Standards Committee’s numbers?

Yes, but they aren’t in the Standards Committee’s April 26-27, 2013 minutes.

Could the 30,878 refer to the actual number of examinees who took the bar exam in July 2006?

No. An analysis of the National Conference of Bar Examiners’ own data shows that the 30,878 has to be a sample, not the actual population of examinees who took the July 2006 exam.

According to the NCBE statistics, there were 47,793 first-time takers of the July 2006 bar exam compared to 30,878 examinees in Figure 1. Could the 30,878 be those who were from only ABA law schools? The NCBE did not publish a breakdown of the examinees’ law schools, so it’s hard to compute an exact number of examinees from ABA schools. However, a pretty good estimate can be derived. For both the February and July 2006 bar exams, there were 9,056 examinees from non-ABA law schools, law schools outside the U.S., and from law office study. If you assumed that all of these 9,056 non-ABA examinees took the July 2006 bar exam and subtracted this number from 47,793, you would get a low-end estimate of how many July 2006 bar examinees were from ABA law schools, and that estimate is 38,737. Still, even with that generous estimate, there is a discrepancy of 7,859 (38,737 minus 30,878), which is more than 25% of the 30,878 shown in Figure 1. If the 30,878 includes students from non-ABA and ABA law schools, the discrepancy is even bigger.
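For readers who want to check the arithmetic, here is a minimal sketch in Python. It uses only the three figures quoted above; the variable names are my own, and the assumption that every non-ABA examinee sat for the July exam is the same deliberately generous one made in the paragraph.

```python
# Figures quoted in this post (NCBE statistics and Figure 1).
first_time_takers_july_2006 = 47_793
non_aba_examinees_2006 = 9_056  # February and July 2006 combined
figure_1_n = 30_878

# Low-end estimate of ABA examinees: assume ALL non-ABA examinees
# took the July 2006 exam (an assumption, not a published breakdown).
aba_low_estimate = first_time_takers_july_2006 - non_aba_examinees_2006

# Gap between that estimate and the N reported in Figure 1.
discrepancy = aba_low_estimate - figure_1_n

# The gap expressed relative to each of the two numbers.
share_of_figure_1 = discrepancy / figure_1_n
share_of_estimate = discrepancy / aba_low_estimate

# Gap if Figure 1 was meant to cover ABA and non-ABA examinees alike.
discrepancy_all_takers = first_time_takers_july_2006 - figure_1_n

print(f"Low-end ABA estimate: {aba_low_estimate:,}")
print(f"Discrepancy: {discrepancy:,}")
print(f"As a share of Figure 1's N: {share_of_figure_1:.1%}")
print(f"As a share of the estimate: {share_of_estimate:.1%}")
print(f"Discrepancy against all first-time takers: {discrepancy_all_takers:,}")
```

Note that the size of the percentage depends on the base: the gap exceeds 25% when measured against Figure 1's N and is somewhat smaller when measured against the 38,737 estimate.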

The Takeaway

For a proposal as important as this, the Committee should have done a better job of providing detail on the data from its study and the methodology behind the study. That’s needed for anyone to be able to form an informed opinion about whether the shortening of the look-back period is a good or bad policy. The Committee should have shown the analysis that would give confidence that the sample is not systematically biased and is reflective of the actual population of examinees.

Moreover, the study should have gone further and provided detail on the sample of examinees depicted in the study. Greater detail is needed on the make-up of the repeaters. If the repeaters came from a wide variety of law schools, that would bolster the Committee’s argument of no harm; however, if the repeaters came from law schools with above-average percentages of students of color, then a shortening of the look-back period would seem to undermine these schools’ ability to meet the proposed higher ultimate bar pass rate.

No one is saying that the numbers are wrong or cooked, but there simply isn’t enough information to give us confidence about the conclusions. There are certainly plausible explanations that could make sense of the numbers, and it’s very possible that further detail and explanation would allay the concerns laid out in this posting. With a proposed change as big as Standard 316, it’s important to see what those explanations and details are.

Filed under Educational Psychology, legal education

Testing the Test of Legal Problem Solving

A student preparing for the Multistate Bar Exam could liken the exam to posing 200 Rubik’s cube-type questions. Okay, they’re not as hard as solving really hard puzzles, but examinees may sometimes feel that the MBE can be that difficult.


Bonner and D’Agostino (2012) in their research study on the Multistate Bar Exam (MBE) test the test by asking:

• How important is a test-taker’s knowledge of solving legal problems to performance on the MBE?

• To what degree is performance on the MBE dependent on general knowledge, which doesn’t have anything to do with the law or legal reasoning? For instance, are test-wise strategies and common-sense reasoning important to doing well on the MBE?

Background

With thousands of examinees taking this exam annually since 1972, you would think that the answer is a resounding yes to the first and a tepid yes to the second. Why else would most of the states rely on a test if it were not proved valid? But surprisingly, there hasn’t been any published evidence that this high-stakes exam does in fact “assess the extent to which an examinee can apply fundamental legal principles and legal reasoning to analyze a given pattern of facts,” as one article characterized the MBE. In other words, there is no published evidence that the MBE tests skills in legal problem solving.

Validity is what gives any test its worth; it is not an all-purpose quality, for it has meaning only in reference to the test’s purpose. Establishing test validity is not uncommon in other fields such as medical licensure, where there are studies establishing the validity of examinations of medical clinical competence, the internal medicine in-training exam, and the family physician’s examination.

A finding that common-sense reasoning and general test-wise strategies are important to MBE performance would in fact indicate that the test lacks validity as a measure of legal problem solving. There is indirect support for the view that general common sense and test-wise strategies aren’t important. In his review of research, Klein (1993) refers to a study in which law students outperformed college students, untrained in the law, on the MBE. But we can’t conclude from this that common sense and test-wise strategies are unimportant for doing well on the MBE, since maturation effects (just being older and smarter as a result), rather than legal training, cannot be ruled out as a reason for the superior performance of law students.

The Study

In devising a study to measure the validity (construct and criterion) of the MBE, Bonner et al. (2012) drew on the very large body of research about novice-expert differences in problem solving. This research looks at the continuum of expertise suggested by these extremes and the spaces in between. Expert-novice studies compare how people with different levels of experience in a particular field of endeavor go about solving problems in that field. By doing this, scientists hope to see what beginners and intermediates need to do to go to the next level. Cognitive scientists have looked at these differences in many different areas (mathematics, physics, chess playing and medical diagnosis), but legal problem solving hasn’t received a lot of attention.

So what does this research tell us? Experts draw on a wealth of substantive knowledge to solve a problem in their area of expertise. They know more about the field and have a deeper understanding of its subtleties. An expert’s knowledge is organized into complex schemas (abstract mental structures) that allow experts to quickly home in on the relevant information. Their knowledge isn’t limited to the substance of the area (called declarative knowledge); experts also have better executive control of the processes they use to solve a problem. They have better “software” that allows them to use subroutines that separate good paths to a solution from bad ones. In his article on legal reasoning skills, Krieger (2006) found that legal experts engage in “forward-looking processing.”

By contrast, novices and intermediates are rookies with varying degrees of experience in the domain in question. Intermediates are a little better than novices because they have more knowledge of the substance of a field, but their knowledge structures (schemas) aren’t as complex or accurate as those of experts. Compared with experts, intermediates are slower to weed out wrong solutions and less efficient in homing in on the right set of possible solutions.

Bonner et al. classified law graduates as intermediates and devised a study to peer into the processes involved in answering MBE questions. They anticipated that law school graduates needed an amalgam of general as well as domain-specific reasoning skills and thinking-about-thinking (metacognitive) skills to do well on the MBE.

Bonner used a “think-aloud” procedure (online verbalizations made during the very act of answering an MBE question) to peer into the students’ mental processes. A transcript of the verbalizations was then laboriously coded into types which, in turn, fell into broader categories: legal reasoning (classifying issues, using legal principles and drawing early conclusions), general problem solving (referencing facts and using common sense), and metacognition (statements noting self-awareness of learning acts). The data were then evaluated with statistical procedures to see which of the behaviors were associated with choosing the correct answer on MBE questions.

Findings

Here are the results of Bonner and D’Agostino’s study:

• Using legal principles had a strong positive correlation (r = .66) with performance. When students organized decisions by checking all options and marked the ones that were most relevant and irrelevant, they were more likely to use the correct legal principles.

• Using common sense and test-wise strategies had a negative, but not significant, correlation with performance. Test strategizing in particular had a negative relationship with performance: using “deductive elimination, guessing, and seeking test clues was associated with low performance on the selected items.”

• Among the metacognitive skills, organizing (reading through and methodically going through all of the options) had a strong positive correlation (r = .74) with performance. (Note: to understand the meaning of a correlation, square the r; the resulting number is the degree to which the variation in one variable is explained by the other. In this example, 55% of the variation in MBE performance is accounted for by organizing.) When students are self-aware and monitor what they are doing, they increase their chances of picking the right answers.
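
The squaring rule in the note on correlations can be checked with a few lines of arithmetic. Here is a minimal sketch in Python; the helper name `variance_explained` is hypothetical and chosen only for illustration, and the two r values are the ones reported in the findings above.

```python
def variance_explained(r: float) -> float:
    """Return r squared: the share of variation in one variable
    that is accounted for by the other (hypothetical helper)."""
    return r * r


# Correlations reported in the findings above.
findings = [
    ("using legal principles", 0.66),
    ("organizing", 0.74),
]

for label, r in findings:
    share = variance_explained(r)
    print(f"{label}: r = {r:.2f}, r squared = {share:.2%}")

# prints:
# using legal principles: r = 0.66, r squared = 43.56%
# organizing: r = 0.74, r squared = 54.76%
```

Rounded, the second figure is the 55% quoted in the note. By the same rule, using legal principles accounts for roughly 44% of the variation in performance.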

The Takeaway

The bottom line is that the MBE does possess relevance to the construct of legal problem solving, at least with respect to the questions on which the think-aloud was performed. In short, Bonner et al. demonstrate that the MBE has validity for the purpose of testing legal problem solving.

Unsurprisingly, using the correct legal principle was correlated strongly with picking the right answer. This means a thorough understanding and recall of the legal principles are critical to performance. That puts a premium not only on knowing the legal doctrine well but also on exposure to as many different fact patterns as possible, which helps students spot the legal rules and apply (instantiate) them to new facts. Analogical reasoning research says that more diverse exposure to applications of the principles should help trigger a student’s memory and retrieval of the appropriate analogs that match the fact pattern of the question. Students should take a credit-bearing, bar-related review course, and they should take this course seriously.

If you want to do well on the MBE, don’t jump to conclusions and pick the first answer that seems right. The first seemingly right answer could be a distractor, designed to trick impulsive test-takers. Because of the time constraints, examinees are especially susceptible to picking the first seemingly right answer. But the MBE punishes impulsivity and rewards thoroughness, so examinees should go through all the possible choices and mark the right and wrong ones. They shouldn’t waste their time with test-wise strategies such as eliminating choices based on pure common sense or deduction that has no reference to legal knowledge, or looking for clues in the stem of the question. Remember, test-wise strategies unconnected with legal knowledge had a negative relationship to MBE performance.

Although errors in facts that result from poor comprehension were not prevalent among the participants in Bonner and D’Agostino’s study, that doesn’t mean that good reading comprehension is unimportant. If a student has trouble with reading comprehension, the student should start the practice of more active engagement with fact patterns and answer options as Elkin suggests in his guide.

Finally, more training in metacognitive skills should improve performance. Metacognition, which is often confused with cognitive skills such as study strategies, is practiced self-awareness. As Bonner and D’Agostino put it, “practice in self-monitoring, reviewing, and organizing decisions may help test-takers allocate resources effectively and avoid drawing conclusions without verification or reference to complete information.”

Filed under Educational Psychology, legal education

Driven to Distraction?

From the back of any classroom in law school, you’re likely to see students surfing the web, checking emails, or texting while a lecture or class discussion is going on. A recent National Law Journal article reports a study’s finding that 87% of the observed law students were using their computers for apparently non-class related purposes (playing solitaire, checking Facebook, looking at sports scores, and the like) for more than 5 minutes during class. The study by St. John’s Law Professor Jeff Sovern was published in a recent edition of the Louisville Law Review. 2Ls and 3Ls, not 1Ls, were the groups most likely to be engaged in non-class related activities.

Conventional wisdom and common sense tell us that media multitasking is bad for learning and instruction. For stretches of time, students in class aren’t paying attention to what’s happening in class. But it’s unclear whether students believe they have good reasons for disengaging. Perhaps the class discussion or portion of the lecture was perceived as boring or not relevant to what will be on a test.

Scientists call this student behavior “media multitasking,” and it isn’t confined to the classroom. Many students probably do this while studying. And media multitasking isn’t just a student problem; we all do it. Psychologist Maria Konnikova in her New Yorker blog writes that the internet is like a “carnival barker” summoning us to take a look.

Konnikova points to a study that suggests a possible adverse side effect of heavy media multitasking. Ophir, Nass, and Wagner (2009) conducted an experiment where they asked the question: Are there any cognitive advantages or disadvantages associated with being a heavy media multitasker? This is one of the few studies that look at the impact of multitasking on academic performance.

In the experiment, participants were classified and assigned to two groups: heavy media multitaskers or light media multitaskers. Participants in the two groups had to make judgments about visual data such as colored shapes, letters or numbers. Ophir et al. found that when both groups were presented with distractors, heavy users were significantly slower to make judgments about the visual data than light users and that heavy users were more likely to make mistakes than the light users. Heavy users also had a more difficult time switching tasks than light users when the distractor condition was presented. The researchers explained the results with the theory that the light users had more top-down executive control in filtering in relevant information and thus were less prone to being distracted by irrelevant stimuli. By contrast, heavy users had a more bottom-up approach of taking in all stimuli and thus were more likely to be taken off track by irrelevant stimuli.

In short, the researchers concluded that being a heavy media multitasker has adverse aftereffects. If you’re a heavy user, you’re more likely to be taking in irrelevant stimuli than if you are a light user. In other words, heavy media multitasking promotes the trait of being over-attentive to everything, relevant or irrelevant. That could mean that if you’re a heavy user, your lecture or book notes are more likely to contain irrelevant information, irrespective of whether you were actually online when you were taking notes. It’s as though heavy media use has infected your working memory with a bad trait. Because the effects are on working memory, heavy media multitaskers should be advised to go back and deliberate over their notes, and revise accordingly.

But a note of caution. The study’s conclusions are a long way from being applicable to what actually happens in academic life. The study exposed participants to relevant and irrelevant visual stimuli: shapes, letters and numbers to be recognized. So it’s unclear whether the heavy user is equally distracted when she or he is presented with semantic information, that is, textual or oral information that has meaning. And that’s an important qualification of the study’s conclusions because semantic information constitutes the bulk of what we work on as students. As far as I can tell, there is no study in which participants are asked to make judgments about semantic information.

Also the direction of causality is unclear. Does heavy multitasking make a person a poor self-regulator of attention? Or does heavy media multitasking happen because a person is a poor self-regulator?

Although the research is far from conclusive, we shouldn’t wait to act on what exists. Here are a few suggestions on what students and instructors can do.

• First, manage your distractions. Common sense dictates that being distracted is bad for studying, whether the source of the distraction is the internet, romantic relationships or television. Take a behavioral management approach. Refrain from using the internet until you’ve spent some quality undistracted time studying or listening to what’s happening in class, and then reward yourself with a limited amount of internet time after class.

• Second, assess your internet use. Monitoring your use alone will have a reactive effect. Just being aware of what you’re doing will cut down on the amount of irrelevant stimuli you’re exposed to.

• Third, if you identify yourself as a moderate to heavy internet consumer of non-academic content while studying or in class, be more vigilant about the quality of your lecture and study notes. These notes are more likely to contain a lot of irrelevant material, and you’ll need to excise irrelevancies.

• Fourth, instructors should take note: if they see their students tuning out in class, they should re-evaluate their lesson plans and classroom management to more fully engage the majority of their students. Banning the internet from the lecture halls won’t necessarily make students more engaged. If the class doesn’t engage the students, they will find other ways to tune out, with or without the internet.

Filed under Educational Psychology, legal education

George Miller’s Magical Number 7, Novice Law Students and Miller’s Real Legacy

Today’s NY Times Science section featured a story entitled Seven Isn’t the Magic Number for Short-Term Memory on psychologist George Miller’s classic paper on the limits of human short-term memory. His theory, set out in a 1956 article, is that there is a numerical limit of 7 items that humans can retain in “short-term” memory. The gist of the NYT story is that the limit is quaint but outdated. What’s interesting is that the same point was made almost 21 years ago by prominent theorist Alan Baddeley (1994) in The Magical Number Seven: Still Magic After All These Years. The point made in his article is that there isn’t strictly a limit of 7 on short-term memory, and that there are many exceptions or qualifications to the limit of 7.

Short-term memory, in contrast to long-term memory storage, is our workbench or working memory, which we use in active processing of information. Short-term memory limits have a big impact on novices in a new field or endeavor, such as novice learners of the law. In fact, novice law students have greater difficulty reading case law and solving problems using case law because novices lack the automaticity in cognitive functioning that those with greater legal expertise have. Novice law students experience a kind of cognitive overload, as they have to exert great effort on mentally intensive processes to read and interpret legal cases and solve problems based on the cases they have read.

The NY Times article misses the point about Miller’s legacy. The real legacy of his theory is that it helped move psychology toward information-processing models of human thinking. Miller’s article was published in the mid-1950s, when the prevailing learning theory was based on behaviorism (learning based on stimulus and response). As Baddeley points out about Miller’s theory,

“Miller pointed the way ahead for the information-processing approach to cognition.”

That’s the real enduring magical legacy of Miller.

Filed under Educational Psychology